- Published: 09 January 2013

Augmented reality (AR) aims at enhancing the perception of or interaction with the real world through computer-generated content. Azuma et al. define AR as a variation of virtual reality, with the following properties [1]:

1) combines real and virtual objects in a real environment;

2) runs interactively, and in real time;

3) registers (aligns) real and virtual objects with each other.

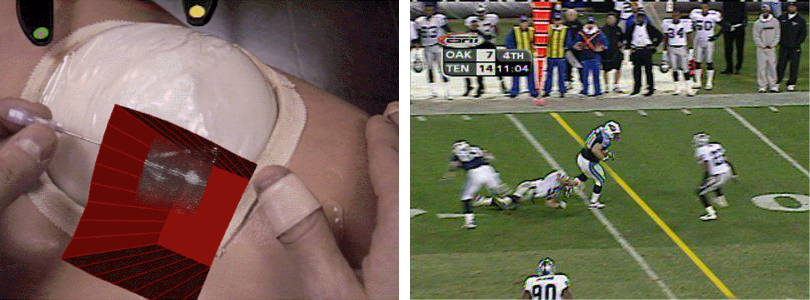

AR is applied in a variety of areas, including industrial maintenance, medicine, and television (see images below), and often uses visual augmentation.

Audio AR, on the other hand, employs computer-generated audio and sounds to extend or enhance the perception of the real world. Application areas for audio AR include navigation, gaming, and social networking.

Research on audio augmented reality applications at Aalto

Our research on audio augmented reality applications focusses on telecommunication scenarios. The main component of our audio augmented reality setup is the ARA headset, consisting of a binaural headset and an equalisation mixer (see Audio augmented reality technology). We have developed a method to process binaural recordings obtained from the ARA headset for use in a teleconference setup [5]. In [6], we demonstrate a simple method to acquire a binaural room impulse response that can be used to position a sound source (e.g., a person calling by phone) virtually in the user's room. From the ARA headset signal, we are able to track the head orientation and position of participants in a conference setup [7,8].

To study various ways of encoding information into sound, we have compared speech vs. nonspeech sounds and headphone vs. loudspeaker playback, as well as various degrees of temporal compression [9]. We implemented efficient rendering of audio for augmented reality via a framework for interpolating head-related transfer functions in real time [10,11].

References

[1] Azuma, R., Baillot, Y., Behringer, R., Feiner, S., Julier, S., and MacIntyre, B. (2001). Recent advances in augmented reality. Computer Graphics and Applications, IEEE, 21(6), pp. 34–47.

[2] State, Andrei, Mark A. Livingston, Gentaro Hirota, William F. Garrett, Mary C. Whitton, Henry Fuchs, and Etta D. Pisano (1996). Technologies for Augmented-Reality Systems: Realizing Ultrasound-Guided Needle Biopsies. In Proc. SIGGRAPH 96, New Orleans, LA, August 4–9, 1996, ACM SIGGRAPH, pp. 439–446.

[3] Rozier, J., Karahalios, K., and Donath, J. (2000). Hear&there: An augmented reality system of linked audio. In Proceedings of the International Conference on Auditory Display (ICAD), pp. 63–67.

[4] Zimmermann, A. and Lorenz, A. (2008). LISTEN: a user-adaptive audio-augmented museum guide. User Modeling and User-Adapted Interaction, 18(5), pp. 389–416.

[5] Gamper, H. and Lokki, T. (2010). Audio Augmented Reality in Telecommunication through Virtual Auditory Display. In Proceedings of the International Conference on Auditory Display (ICAD), 2010, pp. 63–71.

@INPROCEEDINGS{gam10,

author = {Gamper, H. and Lokki, T.},

title = {Audio augmented reality in telecommunication through virtual auditory display},

booktitle = {The 16th International Conference on Auditory Display (ICAD-2010)},

year = {2010},

pages = {63--71},

address = {Washington, D.C., USA},

month = {June 9--15},

keywords = {Augmented reality}

}

Audio communication in its most natural form, the face-to-face conversation, is binaural. Current telecommunication systems often provide only monaural audio, stripping it of spatial cues and thus deteriorating listening comfort and speech intelligibility. In this work, the application of binaural audio in telecommunication through audio augmented reality (AAR) is presented. AAR aims at augmenting auditory perception by embedding spatialised virtual audio content. Used in a telecommunication system, AAR enhances intelligibility and the sense of presence of the user. As a sample use case of AAR, a teleconference scenario is devised. The conference is recorded through a headset with integrated microphones, worn by one of the conference participants. Algorithms are presented to compensate for head movements and restore the spatial cues that encode the perceived directions of the conferees. To analyse the performance of the AAR system, a user study was conducted. Processing the binaural recording with the proposed algorithms places the virtual speakers at fixed directions. This improved the ability of test subjects to segregate the speakers significantly compared to an unprocessed recording. The proposed AAR system outperforms conventional telecommunication systems in terms of the speaker segregation by supporting spatial separation of binaurally recorded speakers.

[6] Gamper, H. and Lokki, T. (2011). Spatialisation in audio augmented reality using finger snaps. Principles and Applications of Spatial Hearing, World Scientific Publishing, 2011, pp. 383–392.

@INPROCEEDINGS{gam11iwpash,

author = {Gamper, H. and Lokki, T.},

title = {Spatialisation in audio augmented reality using finger snaps},

booktitle = {Principles and Applications of Spatial Hearing},

editor = {Yoiti Suzuki and Douglas Brungart and Hiroaki Kato},

year = {2011},

pages = {383--392},

publisher = {World Scientific Publishing},

keywords = {Augmented reality}

}

In audio augmented reality (AAR) information is embedded into the user's surroundings by enhancing the real audio scene with virtual auditory events. To maximize their embeddedness and naturalness they can be processed with the user's head-related impulse responses (HRIRs). The HRIRs including early (room) reflections can be obtained from transients in the signals of ear-plugged microphones worn by the user, referred to as instant binaural room impulse responses (BRIRs). Those can be applied on-the-fly to virtual sounds played back through the earphones. With the presented method, clapping or finger snapping allows for instant capturing of BRIR, thus for intuitive positioning and reasonable externalisation of virtual sounds in enclosed spaces, at low hardware and computational costs.
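Applying a captured BRIR pair to a virtual sound amounts to convolving the mono source with the left-ear and right-ear responses. The following is a minimal sketch of that step, not the authors' implementation: the function names and the toy BRIR values are hypothetical, and a real-time system would more likely use block-wise FFT convolution than the direct form shown here.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution of two sequences of floats."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for n, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[n + k] += x * h
    return out


def render_binaural(mono, brir_left, brir_right):
    """Return (left, right) ear signals for a mono virtual source."""
    return convolve(mono, brir_left), convolve(mono, brir_right)


# Toy BRIR pair (hypothetical values): the right ear receives the sound
# one sample later and attenuated, as for a source on the listener's left.
brir_left = [1.0, 0.0, 0.3]
brir_right = [0.0, 0.6, 0.0, 0.2]
left, right = render_binaural([1.0, 0.5], brir_left, brir_right)
```

Each output channel has length `len(mono) + len(brir) - 1`, so the two channels can differ in length when the two responses do; a player would zero-pad the shorter one.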

[7] Gamper, H., Tervo, S. and Lokki, T. (2011). Head orientation tracking using binaural headset microphones. In Proc. 131st Audio Engineering Society (AES) Convention, New York, NY, USA, 2011, paper no. 8538.

@INPROCEEDINGS{gam11aes,

author = {Gamper, H. and Tervo, S. and Lokki, T.},

title = {Head orientation tracking using binaural headset microphones},

booktitle = {Proc. 131st Audio Engineering Society (AES) Convention},

year = {2011},

note = {Paper no. 8538},

address = {New York, NY, USA},

month = {October 20--23},

keywords = {Augmented reality}

}

A head orientation tracking system using binaural headset microphones is proposed. Unlike previous approaches, the proposed method does not require anchor sources, but relies on speech signals of the wearers of the binaural headsets. From the binaural microphone signals, time of arrival (TOA) and time difference of arrival (TDOA) estimates are obtained. The tracking is performed using a particle filter integrated with a maximum likelihood estimation function. In a case study, the proposed method is used to track the head orientations of three conferees in a meeting scenario. With an accuracy of about 10 degrees, the proposed method is shown to outperform a reference method which achieves an accuracy of about 35 degrees.
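The abstract does not say which estimator produces the TDOA values, so the sketch below assumes the simplest option: pick the lag that maximises the plain cross-correlation between the two microphone signals. The function name, its parameters, and the test signal are hypothetical; practical systems often use a weighted variant such as GCC-PHAT, and the particle filter that consumes these estimates is not shown.

```python
def estimate_tdoa(left, right, sample_rate, max_lag):
    """Return the delay of `right` relative to `left` in seconds.

    A positive value means the sound reached the left microphone first.
    Lags from -max_lag to +max_lag samples are searched exhaustively.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, x in enumerate(left):
            m = n + lag
            if 0 <= m < len(right):
                score += x * right[m]  # correlation at this lag
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate


# Hypothetical test signal: the right channel is the left one delayed
# by two samples.
left = [0.0, 0.0, 1.0, 0.5, -0.3, 0.0, 0.0, 0.0]
right = [0.0, 0.0, 0.0, 0.0, 1.0, 0.5, -0.3, 0.0]
tdoa = estimate_tdoa(left, right, sample_rate=48000, max_lag=4)
```

The search range `max_lag` would normally be bounded by the largest physically possible delay between the two microphones.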

[8] Gamper, H., Tervo, S. and Lokki, T. (2012). Speaker tracking for teleconferencing via binaural headset microphones. In Proc. 13th Int. Workshop on Acoustic Signal Enhancement (IWAENC), Aachen, Germany, 2012.

@INPROCEEDINGS{gam12iwaenc,

author = {Gamper, H. and Tervo, S. and Lokki, T.},

title = {Speaker tracking for teleconferencing via binaural headset microphones},

booktitle = {Proc. 13th Int. Workshop on Acoustic Signal Enhancement (IWAENC)},

year = {2012},

address = {Aachen, Germany},

month = {September 4--6},

keywords = {Augmented reality}

}

Speaker tracking for teleconferencing via user-worn binaural headset microphones in combination with a reference microphone array is proposed. The tracking is implemented with particle filtering based on maximum likelihood estimation of time-difference of arrival (TDOA) estimates. An importance function for prior weighting of the particles of silent conferees (i.e., “listeners”) is proposed. Experimental results from tracking three conferees in a meeting scenario are presented. The use of user-worn microphones in addition to a reference microphone array is shown to improve speaker distance estimation and overall tracking performance substantially. The importance function improved the tracking RMSE by 58% on average. The position tracking RMSE of the proposed method is about 0.11 m.

[9] Gamper, H., Dicke, C., Billinghurst, M. and Puolamäki, K. (2013). Sound sample detection and numerosity estimation using auditory display. ACM Transactions on Applied Perception, 10, pp. 1–18.

@ARTICLE{Gamper2013,

author = {Gamper, H. and Dicke, C. and Billinghurst, M. and Puolam\"{a}ki, K.},

title = {Sound sample detection and numerosity estimation using auditory display},

journal = {ACM Transactions on Applied Perception},

year = {2013},

volume = {10},

pages = {1--18},

doi = {http://doi.acm.org/10.1145/2422105.2422109},

issue = {1},

keywords = {Augmented reality},

url = {https://mediatech.aalto.fi/~hannes/admin/download.php?download=Sound_sample_detection_and_numerosity_estimation_using_auditory_display_TAP2013.pdf}

}

This paper investigates the effect of various design parameters of auditory information display on user performance in two basic information retrieval tasks. We conducted a user test with 22 participants in which sets of sound samples were presented. In the first task, the test participants were asked to detect a given sample among a set of samples. In the second task, the test participants were asked to estimate the relative number of instances of a given sample in two sets of samples. We found that the stimulus onset asynchrony (SOA) of the sound samples had a significant effect on user performance in both tasks. For the sample detection task, the average error rate was about 10% with an SOA of 100 ms. For the numerosity estimation task, an SOA of at least 200 ms was necessary to yield average error rates lower than 30%. Other parameters, including the samples’ sound type (synthesised speech or earcons) and spatial quality (multichannel loudspeaker or diotic headphone playback), had no substantial effect on user performance. These results suggest that diotic or indeed monophonic playback with appropriately chosen SOA may be sufficient in practical applications for users to perform the given information retrieval tasks, if information about the sample location is not relevant. If location information was provided through spatial playback of the samples, test subjects were able to simultaneously detect and localise a sample with reasonable accuracy.

[10] Gamper, H. (2013). Selection and interpolation of head-related transfer functions for rendering moving virtual sound sources. In Proc. Int. Conf. Digital Audio Effects (DAFx), Maynooth, Ireland.

@INPROCEEDINGS{Gamper2013b,

author = {H. Gamper},

title = {Selection and interpolation of head-related transfer functions for rendering moving virtual sound sources},

booktitle = {Proc. Int. Conf. Digital Audio Effects (DAFx)},

year = {2013},

address = {Maynooth, Ireland},

url = {https://mediatech.aalto.fi/~hannes/admin/download.php?download=Selection_and_interpolation_of_head_related_transfer_functions_Gamper_DAFx_2013.pdf}

}

A variety of approaches have been proposed previously to interpolate head-related transfer functions (HRTFs). However, relatively little attention has been given to the way a suitable set of HRTFs is chosen for interpolation and to the calculation of the interpolation weights. This paper presents an efficient and robust way to select a minimal set of HRTFs and to calculate appropriate weights for interpolation. The proposed method is based on grouping HRTF measurement points into non-overlapping triangles on the surface of a sphere by calculating the convex hull. The resulting Delaunay triangulation maximises minimum angles. For interpolation, the HRTF triangle that is intersected by the desired sound source vector is selected. The selection is based on a point-in-triangle test that can be performed using just 9 multiplications and 6 additions per triangle. A further improvement of the selection process is achieved by sorting the HRTF triangles according to their distance from the sound source vector prior to performing the point-in-triangle tests. The HRTFs of the selected triangle are interpolated using weights derived from vector-base amplitude panning, with appropriate normalisation. The proposed method is compared to state-of-the-art methods. It is shown to be robust with respect to irregularities in the HRTF measurement grid and to be well-suited for rendering moving virtual sources.
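One way to read the point-in-triangle test and the weight calculation is as a single vector-base amplitude panning step: express the source direction p as g1·l1 + g2·l2 + g3·l3, where l1, l2, l3 are the measurement directions of a triangle. If the inverse of the matrix with rows l1, l2, l3 is precomputed, the gains cost three dot products, i.e. 9 multiplications and 6 additions, and the source lies inside the triangle exactly when no gain is negative. The sketch below follows that reading. The function names are hypothetical, and normalising the gains to sum to one is an assumption, since the abstract only mentions "appropriate normalisation".

```python
def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def inverse_basis(l1, l2, l3):
    """Precompute the columns of the inverse of the matrix with rows l1, l2, l3."""
    det = dot(l1, cross(l2, l3))
    if abs(det) < 1e-12:
        raise ValueError("triangle vertices are (nearly) coplanar with the origin")
    columns = (cross(l2, l3), cross(l3, l1), cross(l1, l2))
    return tuple(tuple(x / det for x in col) for col in columns)


def gains(p, inv):
    """VBAP gains: three dot products (9 multiplications, 6 additions)."""
    return tuple(dot(p, col) for col in inv)


def interpolation_weights(p, inv, eps=1e-9):
    """Return normalised weights, or None if p falls outside the triangle."""
    g = gains(p, inv)
    if min(g) < -eps:
        return None  # a negative gain means p is outside this triangle
    total = sum(g)
    return tuple(x / total for x in g)


# Hypothetical triangle spanned by the three coordinate axes.
inv = inverse_basis((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
s = 3 ** -0.5
weights = interpolation_weights((s, s, s), inv)   # equal weights
outside = interpolation_weights((-1.0, 0.0, 0.0), inv)  # None
```

Building the triangulation from the convex hull and sorting the triangles by distance from the source vector are separate steps that this sketch leaves out.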

[11] Gamper, H. (2013). Head-related transfer function interpolation in azimuth, elevation, and distance. Journal of the Acoustical Society of America (JASA), 134(6), pp. EL547–EL554.

@ARTICLE{Gamper2013a,

author = {Hannes Gamper},

title = {Head-related transfer function interpolation in azimuth, elevation, and distance},

journal = {J. Acoust. Soc. America},

year = {2013},

volume = {134},

pages = {EL547--EL554},

number = {6},

month = {December},

note = {MATLAB demonstration of the algorithm: \url{http://www.mathworks.com/matlabcentral/fileexchange/43809}},

doi = {10.1121/1.4828983},

keywords = {Augmented reality,Auralization,HRTF,3-D,interpolation},

owner = {hannes},

timestamp = {2013.08.20},

url = {https://mediatech.aalto.fi/~hannes/admin/download.php?download=http://link.aip.org/link/?JAS/134/EL547/1}

}

Although distance-dependent head-related transfer function (HRTF) databases provide interesting possibilities, e.g., for rendering virtual sounds in the near-field, there is a lack of algorithms and tools to make use of them. Here, a framework is proposed for interpolating HRTF measurements in 3-D (i.e., azimuth, elevation, and distance) using tetrahedral interpolation with barycentric weights. For interpolation, a tetrahedral mesh is generated via Delaunay triangulation and searched via an adjacency walk, making the framework robust with respect to irregularly positioned HRTF measurements and computationally efficient. An objective evaluation of the proposed framework indicates good accordance between measured and interpolated near-field HRTFs.
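The barycentric weights of a query position inside one tetrahedron can be obtained by solving a 3×3 linear system, after which the interpolated HRTF is the weighted sum of the four vertex HRTFs. The sketch below illustrates only this step under simplifying assumptions: positions are Cartesian coordinates, each HRTF is a short list of magnitude values, and all function names and numbers are hypothetical. Mesh generation via Delaunay triangulation and the adjacency walk are not shown.

```python
def sub(u, v):
    return (u[0] - v[0], u[1] - v[1], u[2] - v[2])


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def dot(u, v):
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def barycentric_weights(p, tetra):
    """Weights (w0, w1, w2, w3) with p = sum(w_i * v_i) and sum(w_i) = 1."""
    v0, v1, v2, v3 = tetra
    a, b, c = sub(v0, v3), sub(v1, v3), sub(v2, v3)
    d = sub(p, v3)
    det = dot(a, cross(b, c))
    if abs(det) < 1e-12:
        raise ValueError("degenerate tetrahedron")
    w0 = dot(d, cross(b, c)) / det
    w1 = dot(d, cross(c, a)) / det
    w2 = dot(d, cross(a, b)) / det
    return (w0, w1, w2, 1.0 - w0 - w1 - w2)


def interpolate(weights, hrtfs):
    """Weighted sum of four equally long HRTF vectors, bin by bin."""
    return [sum(w * h[k] for w, h in zip(weights, hrtfs))
            for k in range(len(hrtfs[0]))]


# Hypothetical tetrahedron and two-bin HRTF magnitudes at its vertices.
tetra = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0))
hrtfs = ([1.0, 0.0], [2.0, 0.0], [3.0, 0.0], [4.0, 1.0])
w = barycentric_weights((0.2, 0.3, 0.4), tetra)
h = interpolate(w, hrtfs)
```

A query point lies inside the tetrahedron when all four weights are non-negative, so a negative weight can serve as the cue for moving to a neighbouring tetrahedron during the mesh search.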