- Published: 09 January 2013

Augmented reality applications require that users perceive both the environment around them and the virtual objects added to it. In audio augmented reality, this can be realized with two basic approaches: "acoustic hear-through" (or simply hear-through) augmented reality and "microphone hear-through" (or mic-through) augmented reality [1]. Hear-through AR can be achieved using, e.g., bone-conduction headphones, which leave the ear canals open and therefore do not attenuate sounds from the surroundings. The headset used in mic-through AR does attenuate sounds from the surroundings, but it has a microphone attached to each earpiece. The microphone signals are mixed with the virtual sounds and played back through the headphones, so the acoustic environment is still heard without attenuation.
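The mic-through mixing step can be sketched as follows. This is a minimal illustration, assuming digital sample streams normalized to [-1, 1]; the function name `mic_through_mix` and the hard clipping are hypothetical choices made for this example, not a description of the mixer used in the Aalto work. Equalization of the microphone signals is omitted here.

```python
def mic_through_mix(mic_left, mic_right, virt_left, virt_right):
    """Mix binaural microphone signals with rendered virtual sound, per ear.

    All inputs are equal-length sequences of float samples in [-1.0, 1.0].
    The mixed result is hard-clipped to that range (an illustrative choice)
    so that loud passages cannot exceed full scale at the output.
    """
    def mix(mic, virt):
        if len(mic) != len(virt):
            raise ValueError("microphone and virtual signals must have equal length")
        # Sum the natural (microphone) and virtual samples, then clip.
        return [max(-1.0, min(1.0, m + v)) for m, v in zip(mic, virt)]

    return mix(mic_left, virt_left), mix(mic_right, virt_right)


if __name__ == "__main__":
    left, right = mic_through_mix([0.25, 0.5], [0.25, -0.5], [0.5, 0.75], [0.0, 0.0])
    print(left, right)  # [0.75, 1.0] [0.25, -0.5]
```

Each ear is mixed independently so that the binaural cues captured by the two microphones are preserved.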

Although bone-conduction headphones let the listener hear natural sounds unattenuated and without loss of quality while virtual sound sources are rendered, mic-through techniques have their own advantages. Firstly, reproducing the natural acoustic environment through headphones allows the listener to attenuate natural sounds when desired, or alternatively to amplify them. Even more advanced modifications of the perceived acoustic environment are possible, but they are restricted by the latency that the processing introduces. Secondly, the signals from the binaural microphones can also be used in many other applications. Thirdly, the sound quality of bone-conduction headphones is generally inferior to that of conventional headphones. Finally, using the same headphones to reproduce both the natural acoustic environment and the sound events that augment it presumably results in better integration of natural and virtual sounds.
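As a rough illustration of the level control and the latency constraint, the sketch below assumes the natural sound is processed digitally in blocks of samples. The helper names are hypothetical. A gain in dB is converted to a linear factor (negative values attenuate, positive values amplify), and buffering one block of N samples at sample rate fs delays the signal by at least N/fs. Converters and any further processing add to this, so the block figure is only a lower bound.

```python
def db_to_gain(gain_db):
    """Convert a level change in dB to a linear amplitude factor."""
    return 10.0 ** (gain_db / 20.0)


def scale_environment(mic, gain_db):
    """Attenuate (gain_db < 0) or amplify (gain_db > 0) the mic-through signal."""
    g = db_to_gain(gain_db)
    return [g * s for s in mic]


def block_latency_ms(block_size, sample_rate_hz):
    """Delay contributed by buffering one block of samples, in milliseconds."""
    return 1000.0 * block_size / sample_rate_hz


if __name__ == "__main__":
    print(scale_environment([0.5, -0.5], -20.0))  # about [0.05, -0.05]
    print(block_latency_ms(48, 48000))            # 1.0
```

Larger blocks make more elaborate processing affordable but increase the delay between the real sound reaching the ear and its reproduction through the headphones.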

Research on audio augmented reality technology at Aalto

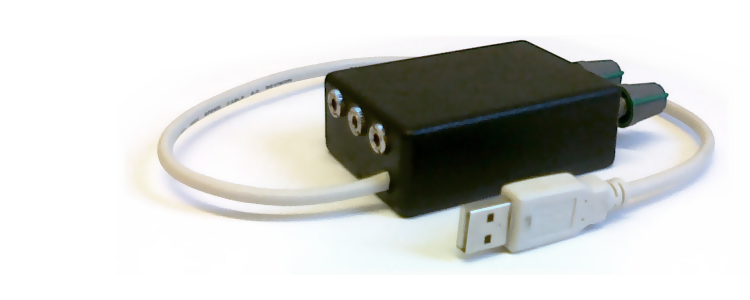

The Department of Media Technology and the Department of Signal Processing and Acoustics at Aalto University, together with Nokia Research Center, have conducted research on mic-through augmented reality technology using insert-type headphones with binaural microphones. Because the headphones block the ear canals, a half-wavelength resonance forms in place of the quarter-wavelength resonance of the open ear canal (the open canal is a tube closed at the eardrum and open at the entrance, whereas the blocked canal is closed at both ends). The microphone signals must therefore be equalized to compensate for the changed resonances, as well as for the leakage of low-frequency sound past the headphones [2]. The original augmented reality audio mixer and equalizer presented in [2] has since been further developed to provide audio input, audio output, and power through USB [3].
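The resonance shift and a possible digital compensation can be sketched as follows, modelling the ear canal as an idealized cylinder of length L: the open canal resonates at c/(4L) and the blocked canal at c/(2L). The canal length (25 mm), the speed of sound (343 m/s), and the filter gains and Q are assumed values chosen for illustration; real ear geometry and the inserted earpiece shift these frequencies. The peaking filters use the widely known Audio EQ Cookbook biquad formulas. This is not necessarily how the equalizer in [2] is implemented, and the low-frequency leakage compensation is not modelled.

```python
import cmath
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 degrees C


def quarter_wave_resonance_hz(length_m, c=SPEED_OF_SOUND_M_S):
    """Tube closed at one end and open at the other (open ear canal)."""
    return c / (4.0 * length_m)


def half_wave_resonance_hz(length_m, c=SPEED_OF_SOUND_M_S):
    """Tube closed at both ends (ear canal blocked by an earpiece)."""
    return c / (2.0 * length_m)


def peaking_biquad(f0_hz, gain_db, q, fs_hz):
    """Peaking-EQ biquad coefficients (b, a), normalized so that a[0] == 1."""
    A = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0_hz / fs_hz
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha / A
    b = [(1.0 + alpha * A) / a0, -2.0 * cos_w0 / a0, (1.0 - alpha * A) / a0]
    a = [1.0, -2.0 * cos_w0 / a0, (1.0 - alpha / A) / a0]
    return b, a


def magnitude_db(b, a, f_hz, fs_hz):
    """Magnitude response of one biquad at a single frequency, in dB."""
    z_inv = cmath.exp(-1j * 2.0 * math.pi * f_hz / fs_hz)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return 20.0 * math.log10(abs(num / den))


def cascade_db(sections, f_hz, fs_hz):
    """Total magnitude (dB) of biquad sections connected in series."""
    return sum(magnitude_db(b, a, f_hz, fs_hz) for b, a in sections)


if __name__ == "__main__":
    length_m = 0.025  # hypothetical ear-canal length
    fs = 48000.0
    f_open = quarter_wave_resonance_hz(length_m)     # about 3.4 kHz
    f_blocked = half_wave_resonance_hz(length_m)     # about 6.9 kHz
    eq = [
        peaking_biquad(f_open, 10.0, 2.0, fs),       # restore the missing open-ear peak
        peaking_biquad(f_blocked, -10.0, 2.0, fs),   # damp the blocked-canal peak
    ]
    for f in (f_open, f_blocked):
        print(f"{f:.0f} Hz: {cascade_db(eq, f, fs):+.1f} dB")
```

Because the quarter-wave peak is missing from the microphone signal and the half-wave peak is added by the blocked canal, the sketch boosts the former frequency and cuts the latter.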

References

[1] Lindeman, R., Noma, H., and De Barros, P., "Hear-through and mic-through augmented reality: Using bone conduction to display spatialized audio," in 6th International Symposium on Mixed and Augmented Reality (ISMAR 2007), pp. 173–176, Nara, Japan, November 13–16, 2007.

[2] Riikonen, V., Tikander, M., and Karjalainen, M., "An augmented reality audio mixer and equalizer," in AES 124th Convention, Amsterdam, the Netherlands, May 17–20, 2008.

[3] Albrecht, R., Lokki, T., and Savioja, L., "A mobile augmented reality audio system with binaural microphones," in Interacting with Sound Workshop (IwS'11), pp. 7–11, Stockholm, Sweden, August 30, 2011.